comparing the incomparable - #devcamp16 vs. #swec16

Lucky me, living in the metropolitan area of Nuremberg, … this year I attended three different developer-oriented open spaces hosted within one hour driving range from home.

- devops camp in Nuremberg, six iterations so far, first one back in March 2014

And starting this year there are now two developer-centric events (both more or less claiming to be the first one):

- developercamp in Würzburg, back in September 2016

- swecamp in Tennenlohe/Erlangen, right yesterday & today

At the closing event of swecamp one participant mentioned that he was from Würzburg and had also attended the developercamp… and that swecamp was really cool yet not on par with developercamp. Unfortunately he wasn’t willing to elaborate on what caused his feeling, but it kind of struck me … I couldn’t really answer the question of which of those two camps was better (and remained quiet during the retrospective)…

But it kept bothering me and I had some time to think on my way home, … however I still cannot tell which one was “better”, mainly since it doesn’t feel right to compare the incomparable. After all both events attracted (slightly) different kinds of people. And as barcamps are largely shaped by their participants, that is why both events felt different…

developercamp was organized by Mayflower which is a project & consulting agency in PHP & JavaScript field, also doing some Agile consulting, etc. … they mainly attracted (and this is personal gut feeling, not statistics) Webbish people, mostly developers but also a lot of product owners. Most attendees had an e-commerce background, be it a webshop, some shop software itself (Benjamin from Shopware was there) or some middleware provider. Topics were manifold, from What’s the job of a software architect? (which was interesting with half the audience from an Agile background and the other half from a more waterfallish one) over various Product Owner and discovery focussed stuff to dev-centric like Christopher’s TypeScript Game Engine Primer. Yet most sessions felt like fitting into my personal PHP/JavaScript/CleanCode filter bubble.

Opposed to that swecamp was organized by methodpark which also does projects and consulting, yet in more enterprisy contexts: medical devices and automotive. They managed to attract people from a (perceived) broader range of backgrounds … of course there were many of their own employees (Java, Embedded), more Java and C#, C++ and embedded devs from companies nearby … and of course also some Webbies (like me). The session topics were a bit more focussed on the engineering part and theoretical (read: language agnostic and less hands-on-code oriented) and a bit more testing. There were no Product Owner things and interestingly no operations stuff (Puppet, Ansible et al) and IoT was more of a topic (unfortunately I completely missed the IoT hack space)

What was especially cool about swecamp was that they had Susume Sasabe from Japan as a special guest, who did a comparison of DEV culture in Japan and Germany. He also spoke about Kaizen, different approaches to knowledge transfer, different problem solving methods etc. … all in all enriching the whole event here and there. Besides that I really enjoyed @NullPlusEins’s sessions on (developer) psychology.

I also happily noticed that CQRS and Event Sourcing were a topic at both events, maybe a little bit more focussed on DDD at swecamp. This isn’t too surprising because of all the microservices buzz (which also was a hot topic at both events). Again serverless was not a topic, meh.

Last but not least swecamp was hosted in methodpark’s office which was way cosier and more comfortable, more decorated than the university building in Würzburg (where developercamp took place).

To sum it up, both events have their unique points. I really enjoyed developercamp (as a Web developer) and also swecamp, both had some sessions that really resonated with me (and after all that’s the main reason for me to go to unconferences: learning about things I don’t know that I don’t know). I’m so happy that I don’t have to pick a single event to go to next year. Both organizers have already announced that they’ll follow up with a second iteration (and I’m more than willing to go to both of them).

PS: as a funny note, the devops camp site is the only one hosted with plain, unencrypted HTTP. Both more developer focussed events feature SSL :-)

PPS: developercamp also hosts on IPv6 and their hosting also supports Forward Secrecy, so let’s consider it better. SCNR :-)

Customizing my Keyboard Layout on NixOS

UPDATE: The exact methodology shown here does not work anymore. See here for an updated version.

Here’s one missing write-up on a problem I immediately faced after reinstalling my laptop with NixOS: my customized keyboard layout was missing. I’ve been using Programmer Dvorak for a pretty long time now. But I usually apply two modifications:

- rearrange the numbers 0..9 from left to right (as on a “normal” keyboard, just using shift as modifier key)

- add German umlauts to A, O and U keys (on modifier level 3)

… and use AltGr as modifier key to access level 3.

On Ubuntu I had a Puppet module around that used some augeas rules to patch /usr/share/X11/xkb/symbols/us as well as evdev.xml along with it.

There are several problems with this on NixOS: I don’t have Puppet modifying system state; instead the system is immutable. And after all there’s simply no such file :)

So pretty obviously modifying the keyboard layout is more involved on NixOS. I pretty quickly came to the conclusion that I would have to patch the xkeyboard-config sources and use some form of package override. Yet I had to tackle some learning curve first …

- you cannot directly override xorg.xkeyboardconfig = ... as that would “remove” all the other stuff from below xorg. The trick is to override xorg with itself and merge in a (possibly recursive) hash with a changed version of xkeyboardconfig.

- overriding xorg.xkeyboardconfig completely also turned out to be a bad idea as it’s indirectly included in almost every X.org derivation (so nixos-rebuild wanted to recompile LibreOffice et al – which I clearly didn’t want it to do)

- almost close to frustration I found this configuration.nix Gist where someone obviously tries to do just the same – but the Gist doesn’t have many searchable terms (actually it’s just code), so it was really hard to find :) … his trick is to use overrideDerivation based on xorg.xkeyboardconfig but store it in a different variable. Then derive xorgserver, setxkbmap and xkbcomp and just use the modified xkeyboard configuration there

So here’s my change to /etc/nixos/configuration.nix:

nixpkgs.config.packageOverrides = super: {

xorg = super.xorg // rec {

xkeyboard_config_dvp = super.pkgs.lib.overrideDerivation super.xorg.xkeyboardconfig (old: {

patches = [

(builtins.toFile "stesie-dvp.patch" ''

Index: xkeyboard-config-2.17/symbols/us

===================================================================

--- xkeyboard-config-2.17.orig/symbols/us

+++ xkeyboard-config-2.17/symbols/us

@@ -1557,6 +1557,34 @@ xkb_symbols "crd" {

include "compose(rctrl)"

};

+partial alphanumeric_keys

+xkb_symbols "stesie" {

+

+ include "us(dvp)"

+ name[Group1] = "English (Modified Programmer Dvorak)";

+

+ // Unmodified Shift AltGr Shift+AltGr

+ // symbols row, left side

+ key <AE01> { [ ampersand, 1 ] };

+ key <AE02> { [ bracketleft, 2, currency ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE03> { [ braceleft, 3, cent ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE04> { [ braceright, 4, yen ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE05> { [ parenleft, 5, EuroSign ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE06> { [ equal, 6, sterling ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+

+ // symbols row, right side

+ key <AE07> { [ asterisk, 7 ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE08> { [ parenright, 8, onehalf ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE09> { [ plus, 9 ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE10> { [ bracketright, 0 ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE11> { [ exclam, percent, exclamdown ], type[Group1] = "FOUR_LEVEL_ALPHABETIC" };

+ key <AE12> { [ numbersign, grave, dead_grave ] };

+

+ // home row, left side

+ key <AC01> { [ a, A, adiaeresis, Adiaeresis ] };

+ key <AC02> { [ o, O, odiaeresis, Odiaeresis ] };

+ key <AC04> { [ u, U, udiaeresis, Udiaeresis ] };

+};

partial alphanumeric_keys

xkb_symbols "sun_type6" {

Index: xkeyboard-config-2.17/rules/evdev.xml.in

===================================================================

--- xkeyboard-config-2.17.orig/rules/evdev.xml.in

+++ xkeyboard-config-2.17/rules/evdev.xml.in

@@ -1401,6 +1401,12 @@

</variant>

<variant>

<configItem>

+ <name>stesie</name>

+ <description>English (Modified Programmer Dvorak)</description>

+ </configItem>

+ </variant>

+ <variant>

+ <configItem>

<name>rus</name>

<!-- Keyboard indicator for Russian layouts -->

<_shortDescription>ru</_shortDescription>

'') # }

];

});

xorgserver = super.pkgs.lib.overrideDerivation super.xorg.xorgserver (old: {

postInstall = ''

rm -fr $out/share/X11/xkb/compiled

ln -s /var/tmp $out/share/X11/xkb/compiled

wrapProgram $out/bin/Xephyr \

--set XKB_BINDIR "${xkbcomp}/bin" \

--add-flags "-xkbdir ${xkeyboard_config_dvp}/share/X11/xkb"

wrapProgram $out/bin/Xvfb \

--set XKB_BINDIR "${xkbcomp}/bin" \

--set XORG_DRI_DRIVER_PATH ${super.mesa}/lib/dri \

--add-flags "-xkbdir ${xkeyboard_config_dvp}/share/X11/xkb"

( # assert() keeps runtime reference xorgserver-dev in xf86-video-intel and others

cd "$dev"

for f in include/xorg/*.h; do # */

sed "1i#line 1 \"${old.name}/$f\"" -i "$f"

done

)

'';

});

setxkbmap = super.pkgs.lib.overrideDerivation super.xorg.setxkbmap (old: {

postInstall =

''

mkdir -p $out/share

ln -sfn ${xkeyboard_config_dvp}/etc/X11 $out/share/X11

'';

});

xkbcomp = super.pkgs.lib.overrideDerivation super.xorg.xkbcomp (old: {

configureFlags = "--with-xkb-config-root=${xkeyboard_config_dvp}/share/X11/xkb";

});

};

};

After a nixos-rebuild switch I was able to run setxkbmap us stesie to have my modified layout loaded. Last but not least I switched the default keyboard layout in configuration.nix like so:

services.xserver.layout = "us";
services.xserver.xkbVariant = "stesie";
services.xserver.xkbOptions = "lv3:ralt_switch";

NixOS, PHP, PHPUnit & PhpStorm

Since one of my goals with NixOS was to conveniently install multiple PHP versions side by side (and without the hassle to compile manually with --prefix=/opt/php-something/ flags) I’ve finally tried to get a feasible setup.

For the moment I decided to go with “central” (i.e. not-per-project) installations within my $HOME as I don’t want to set up a whole environment just to do a Kata every once in a while.

Installing PHP 7.0

This should actually be pretty straightforward since PHP 7.0 as well as xdebug are both part of official nixpkgs … and once you know what to do it actually is :)

Obviously we need to create our own derivation and can then simply use buildEnv to symlink our buildInputs to the output directory. However the PHP binary has the extension path baked in (the one in its own Nix store path), so we have to override that one. This can be done using a makeWrapper script that simply provides the -d extension_dir=... flag before anything else.

In order to be able to do that we first need to fiddle with the /bin directory though, as only the php70 build input has a /bin directory and buildEnv hence simply links the whole $php70/bin directory to $out/bin.

I decided to put the PHP 7.0 environment to $HOME/bin/php70, which I mkdir’ed first and then created a default.nix like this:

with import <nixpkgs> { };

stdenv.mkDerivation rec {
  name = "php70-env";

  env = buildEnv {
    inherit buildInputs name;
    paths = buildInputs;
    postBuild = ''
      mkdir $out/bin.writable && cp --symbolic-link `readlink $out/bin`/* $out/bin.writable/ && rm $out/bin && mv $out/bin.writable $out/bin
      wrapProgram $out/bin/php --add-flags "-d extension_dir=$out/lib/php/extensions -d zend_extension=xdebug.so"
    '';
  };

  builder = builtins.toFile "builder.sh" ''
    source $stdenv/setup; ln -s $env $out
  '';

  buildInputs = [
    php70
    php70Packages.xdebug
    makeWrapper
  ];
}

(maybe there’s a more elegant solution to replace this link with a directory of symlinks, … but I haven’t found out yet. Please tell me in case you know how to do it properly)

… then after running nix-build you should have a ./result/bin/php which is a shell script wrapping the actual php binary to automatically load xdebug.so.

Installing PHP 7.1 (beta 3)

This is of course a little bit more involved as PHP 7.1 isn’t yet part of nixpkgs, since it’s not really released yet.

The installation (including compilation) of PHP 7.1 can be achieved by simply creating an overrideDerivation of pkgs.php70 with name, version and src adapted as needed.

… the xdebug PECL package from nixpkgs cannot simply be re-used as the latest release doesn’t support PHP 7.1 as of yet. But creating a derivation for a PECL package from scratch isn’t complicated, so let’s just do that manually :)

So here’s the php71/default.nix file:

with import <nixpkgs> { };

let
  php71 = pkgs.lib.overrideDerivation pkgs.php70 (old: rec {
    version = "7.1.0beta3";
    name = "php-${version}";
    src = fetchurl {
      url = "https://downloads.php.net/~davey/php-${version}.tar.bz2";
      sha256 = "02gv98xaal8pdr1yj57k2ns4v8g53iixrz4dynb5nlr81vfg4gwi";
    };
  });

  php71_xdebug = stdenv.mkDerivation rec {
    name = "php-xdebug-55fccbb";
    src = fetchgit {
      url = "https://github.com/xdebug/xdebug";
      rev = "55fccbbcb8da0195bb9a7c332ea5364f58b9316b";
      sha256 = "15wgvzf7l050x94q0a62ifxi5j7p9wn2f603qzxwcxb7ximd9ffb";
    };
    buildInputs = [ php71 autoreconfHook ];
    makeFlags = [ "EXTENSION_DIR=$(out)/lib/php/extensions" ];
    autoreconfPhase = "phpize";
    preConfigure = "touch unix.h";
  };
in
stdenv.mkDerivation rec {
  name = "php71-env";

  env = buildEnv {
    inherit buildInputs name;
    paths = buildInputs;
    postBuild = ''
      mkdir $out/bin.writable && cp --symbolic-link `readlink $out/bin`/* $out/bin.writable/ && rm $out/bin && mv $out/bin.writable $out/bin
      wrapProgram $out/bin/php --add-flags "-d extension_dir=$out/lib/php/extensions -d zend_extension=xdebug.so"
    '';
  };

  builder = builtins.toFile "builder.sh" ''
    source $stdenv/setup; ln -s $env $out
  '';

  buildInputs = [
    php71
    php71_xdebug
    makeWrapper
  ];
}

The touch unix.h thing is actually a hack needed to compile the extension properly as php’s config.h has the HAVE_UNIX_H flag defined, which should actually tell whether the system has a unix.h file (which old Unix systems obviously once had, Linux systems don’t). However the imap client library has a unix.h file which tricks php’s configure script into noting that it has one :) … and as we (obviously) don’t have the imap library as build input, php.h’s #include would fail without that touch…

PHPUnit & PhpStorm

PHPUnit isn’t packaged in nixpkgs, but after all it’s just a phar archive file and we’d like to run it with different PHP versions anyway, therefore I’ve just downloaded it and stored it in $HOME/bin as well.

Configuring PhpStorm is pretty straightforward: just go to File > Default Settings > Languages & Frameworks > PHP and click the three-dots-button next to Interpreter. Then simply add both PHP executables at $HOME/bin/php70/result/bin/php and $HOME/bin/php71/result/bin/php. PhpStorm should automatically find out about the php.ini file as well as the Xdebug extension.

… last but not least go to PHPUnit config folder, choose Path to phpunit.phar and point it to the downloaded phar archive.

… and now you’re set :) Just select one of the interpreters and run your tests (and then switch interpreters to your liking).

Building a Jekyll Environment with NixOS

So there is this idea with NixOS to install only the very base system in the global environment and augment it using Development Environments. And as I’m creating this blog using GitHub Pages aka Jekyll, writing in Markdown, and would like to be able to preview any changes locally, I of course need Jekyll running locally. Jekyll is even in nixpkgs, … but there are Jekyll plugins which aren’t bundled with this package and are essential for correct rendering of e.g. @-mentions and source code blocks.

… so the obvious step was to create such a NixOS Development Environment, which has Ruby 2.2, Jekyll and all the required plugins installed. Turns out there even is a github-pages Gem, so we just need to “package” that. Packaging Ruby gems is pretty straightforward actually, … so let’s create a minimal Gemfile first:

source 'https://rubygems.org'
gem 'github-pages'

The GitHub page has this ", group: :jekyll_plugins" thing in it, … I had to remove it, otherwise nix-shell complains that it cannot find the Jekyll gem file once you try to run it (later).

Then we need to create Gemfile.lock by running bundler (from within a nix-shell that has bundler):

$ nix-shell -p bundler
$ bundler package --no-install --path vendor
$ rm -rf .bundler vendor
$ exit # leave nix-shell

… and derive a Nix expression from Gemfile.lock like so (be sure not to accidentally run this command from within the other nix-shell, which would otherwise fail with strange SSL errors):

$ $(nix-build '<nixpkgs>' -A bundix)/bin/bundix
$ rm result # nix-build created this (linking to bundix build)

… and last but not least we need a default.nix file which actually triggers the environment creation and also automatically starts jekyll serve after build:

with import <nixpkgs> { };

let jekyll_env = bundlerEnv rec {
  name = "jekyll_env";
  ruby = ruby_2_2;
  gemfile = ./Gemfile;
  lockfile = ./Gemfile.lock;
  gemset = ./gemset.nix;
};
in
stdenv.mkDerivation rec {
  name = "jekyll_env";
  buildInputs = [ jekyll_env ];

  shellHook = ''
    exec ${jekyll_env}/bin/jekyll serve --watch
  '';
}

Take note of the exec in shellHook which actually replaces the shell which nix-shell is about to start with Jekyll itself, so once you stop it by pressing C-c the environment is immediately closed as well.

So we’re now ready to just start it all:

[stesie@faulobst:~/Projekte/stesie.github.io]$ nix-shell
Configuration file: /home/stesie/Projekte/stesie.github.io/_config.yml
Source: /home/stesie/Projekte/stesie.github.io
Destination: /home/stesie/Projekte/stesie.github.io/_site
Incremental build: enabled
Generating...
done in 0.147 seconds.
Auto-regeneration: enabled for '/home/stesie/Projekte/stesie.github.io'
Configuration file: /home/stesie/Projekte/stesie.github.io/_config.yml
Server address: http://127.0.0.1:4000/
Server running... press ctrl-c to stop.

On Replacing Ubuntu with NixOS (part 1)

After I heard a great talk (at FrOSCon) given by @fpletz on NixOS, which is a Linux distribution built on top of the purely functional Nix package manager, … and I am on holiday this week … I decided to give it a try.

So I backed up my homedir and started replacing my Ubuntu installation, without much of a clue on NixOS … just being experienced with other more or less custom Linux installations (like Linux From Scratch back in the days, various Gentoo boxes, etc.)

Here’s my report and a collection of first experiences with my own very fresh installation, underlining findings which seem important to me. This is the first part (and I intend to add at least two more: one on package customisation and one on development environments)…

Requirements & Constraints

- my laptop: Thinkpad X220, 8 GB RAM, 120 GB SSD

- NixOS replacing Ubuntu (preserving nothing)

- fully encrypted root filesystem & swap space (LUKS)

- i3 (improved tiling window manager), along with screen locking et al

Starting out

First read NixOS’ manual, at least the first major chapter (Installation) and the Wiki page on Encrypted Root on NixOS.

On NixOS’ Download Page there are effectively two choices, a Graphical live CD and a minimal installation. The Thinkpad X220 doesn’t have a CD-ROM drive, and the only USB stick I could find was just a few megabytes too small to fit the graphical live cd … so I went with the minimal installation …

First steps are fairly common, using fdisk to create a new partition table, add a small /boot partition and another big one taking the rest of the space (for LUKS). Then configure LUKS like

$ cryptsetup luksFormat /dev/sda2
$ cryptsetup luksOpen /dev/sda2 crypted

… and create an LVM volume group from it + three logical volumes (swap, root filesystem and /home)

$ pvcreate /dev/mapper/crypted
$ vgcreate cryptedpool /dev/mapper/crypted
$ lvcreate -n swap cryptedpool -L 8GB
$ lvcreate -n root cryptedpool -L 50GB
$ lvcreate -n home cryptedpool -L 20GB

… last but not least format those partitions …

$ mkfs.ext2 /dev/sda1
$ mkfs.ext4 /dev/cryptedpool/root
$ mkfs.ext4 /dev/cryptedpool/home
$ mkswap /dev/cryptedpool/swap

… and finally mount them …

$ mount /dev/cryptedpool/root /mnt
$ mkdir /mnt/{boot,home}
$ mount /dev/sda1 /mnt/boot
$ mount /dev/cryptedpool/home /mnt/home
$ swapon /dev/cryptedpool/swap

Initial Configuration

So we’re now ready to install, … and with other distributions we would now just launch the installer application. Not so with NixOS however, it expects you to create a configuration file first … to simplify this it provides a small generator tool:

$ nixos-generate-config --root /mnt

… which generates two files in /mnt/etc/nixos:

- hardware-configuration.nix which mainly lists the required mounts

- configuration.nix, after all the config file you’re expected to edit (primarily)

The installation image comes with nano pre-installed, so let’s use it to modify the hardware-configuration.nix file to amend some filesystem options:

- configure root filesystem to not store access times and enable discard

- configure /home to support discard as well

… so let’s add option blocks (and leave the rest untouched):

fileSystems."/" =
  { device = "/dev/disk/by-uuid/9d347599-a960-4076-8aa3-614bb9524322";
    fsType = "ext4";
    options = [ "noatime" "nodiratime" "discard" ];
  };

fileSystems."/home" =
  { device = "/dev/disk/by-uuid/d4057681-2533-41b0-9175-18f134d7401f";
    fsType = "ext4";
    options = [ "discard" ];
  };

I have enabled (online) discard on the encrypted filesystems as well as on the LUKS device (see below), also known as TRIM support. TRIM tells the SSD hardware which parts of the filesystem are unused and hence benefits wear leveling. However using discard in combination with encrypted filesystems makes some information (which blocks are unused) leak through the full disk encryption … an attacker might e.g. guess the filesystem type from the pattern of unused blocks. For me this doesn’t matter much but YMMV ;-)

So let’s continue and edit (read: add more stuff to) configuration.nix. First some general system configuration:

# Define on which hard drive you want to install Grub.
boot.loader.grub.device = "/dev/sda";

# Tell initrd to unlock LUKS on /dev/sda2
boot.initrd.luks.devices = [
  { name = "crypted"; device = "/dev/sda2"; preLVM = true; allowDiscards = true; }
];

networking.hostName = "faulobst"; # Define your hostname.

# create a self-resolving hostname entry in /etc/hosts
networking.extraHosts = "127.0.1.1 faulobst";

# Let NetworkManager handle Network (mainly Wifi)
networking.networkmanager.enable = true;

# Select internationalisation properties.
i18n = {
  consoleFont = "Lat2-Terminus16";
  defaultLocale = "en_US.UTF-8";

  # keyboard layout for your Linux console (i.e. off X11), dvp is for "Programmer Dvorak",
  # if unsure pick "us" or "de" :)
  consoleKeyMap = "dvp";
};

# Set your time zone.
time.timeZone = "Europe/Berlin";

# Enable the X11 windowing system.
services.xserver.enable = true;
services.xserver.layout = "us";
services.xserver.xkbVariant = "dvp"; # again, pick whichever layout you'd like to have
services.xserver.xkbOptions = "lv3:ralt_switch";

# Use i3 window manager
services.xserver.windowManager.i3.enable = true;

Packages

Of course our Linux installation should have some software installed. Unlike other distributions, where you typically install software every now and then and thus gradually mutate system state, NixOS allows a more declarative approach: you just list all system packages you’d like to have. Once you don’t want to have a package anymore you simply remove it from the list and Nix will arrange that it’s not available any longer.

Long story short, you have this central list of system packages (which you can also modify any time later) you’d like to have installed and NixOS will ensure they are installed:

… here is my current selection:

environment.systemPackages = with pkgs; [
  bc
  bwm_ng
  coreutils
  curl
  file
  gitAndTools.gitFull
  gnupg
  htop
  libxml2 # xmllint
  libxslt
  lsof
  mosh
  psmisc # pstree, killall et al
  pwgen
  quilt
  tmux
  tree
  unzip
  utillinux
  vim
  w3m
  wget
  which
  zip

  chromium
  firefox
  gimp
  i3 i3lock i3status dmenu
  inkscape
  keepassx2
  libreoffice
  networkmanagerapplet networkmanager_openvpn
  xdg_utils
  xfontsel

  # gtk icons & themes
  gtk gnome.gnomeicontheme hicolor_icon_theme shared_mime_info

  dunst libnotify
  xautolock
  xss-lock

  xfce.exo
  xfce.gtk_xfce_engine
  xfce.gvfs
  xfce.terminal
  xfce.thunar
  xfce.thunar_volman
  xfce.xfce4icontheme
  xfce.xfce4settings
  xfce.xfconf
];

… and we need to configure our Xsession startup …

- I went with xfsettingsd, the settings daemon from Xfce (inspiration from here)

- NetworkManager applet, sitting in the task tray

- xautolock to lock the screen (using i3lock) after 1 minute (including a notification 10 seconds before actually locking the screen)

- xss-lock to lock the screen on suspend (including keyboard hotkey)

services.xserver.displayManager.sessionCommands = ''
  # Set GTK_PATH so that GTK+ can find the Xfce theme engine.
  export GTK_PATH=${pkgs.xfce.gtk_xfce_engine}/lib/gtk-2.0

  # Set GTK_DATA_PREFIX so that GTK+ can find the Xfce themes.
  export GTK_DATA_PREFIX=${config.system.path}

  # Set GIO_EXTRA_MODULES so that gvfs works.
  export GIO_EXTRA_MODULES=${pkgs.xfce.gvfs}/lib/gio/modules

  # Launch xfce settings daemon.
  ${pkgs.xfce.xfce4settings}/bin/xfsettingsd &

  # Network Manager Applet
  ${pkgs.networkmanagerapplet}/bin/nm-applet &

  # Screen Locking (time-based & on suspend)
  ${pkgs.xautolock}/bin/xautolock -detectsleep -time 1 \
    -locker "${pkgs.i3lock}/bin/i3lock -c 000070" \
    -notify 10 -notifier "${pkgs.libnotify}/bin/notify-send -u critical -t 10000 -- 'Screen will be locked in 10 seconds'" &
  ${pkgs.xss-lock}/bin/xss-lock -- ${pkgs.i3lock}/bin/i3lock -c 000070 &
'';

User Configuration

… and our system needs a user account of course :)

NixOS allows for “mutable users”, i.e. you are allowed to create, modify and delete user accounts at runtime (including changing the user’s password). Conversely, you can disable mutable users and control user accounts from configuration.nix. As NixOS is about system purity I went with the latter approach, so some more statements for the beloved configuration.nix file:

users.mutableUsers = false;

users.extraUsers.stesie = {
  isNormalUser = true;
  home = "/home/stesie";
  description = "Stefan Siegl";
  extraGroups = [ "wheel" "networkmanager" ];
  hashedPassword = "$6$VInXo5W.....$dVaVu.....cmmm09Q26r/";
};

… and finally we’re ready to go:

$ nixos-install

… if everything went well, just reboot and say hello to your new system. If you’ve mis-typed something fear not, simply fix it and re-run nixos-install.

After you’ve finished installation and rebooted into your new system you can always come back and further modify the configuration.nix file, just run nixos-rebuild switch to apply the changes.

Starting a local developer meetup

As Ansbach (and the region around it) has neither a vibrant developer community nor a (regular) meetup to attract people to share their knowledge, @niklas_heer and I felt we had to get active…

Therefore we came up with the idea to host a monthly meetup named /dev/night at @Tradebyte office (from August on regularly every 2nd Tuesday evening), give a short talk to provide food for thought and afterwards tackle a small challenge together.

… looking for some initial topics we noticed that patterns are definitely useful to stay on track and that there are many good ones beyond the good old GoF patterns. And as both of us are working for an eCommerce middleware provider we came to eCommerce patterns … and finally decided to go with Transactional Patterns for the first meeting.

So yesterday @niklas_heer gave a small presentation on what ACID really means and why it is useful beyond database management system design (ever thought of implementing an automated teller machine? or maybe to stick with eCommerce what about fetching DHL labels from a web-service if you’re immediately charged for them? You definitely want to make sure that you don’t fetch them twice if two requests hit your system simultaneously). Besides he showed how to use two-phase commit to construct composite transactions from multiple, smaller ACID-compliant transactions and how this can aid (i.e. simplify) your system’s architecture.

As a challenge we thought of implementing a fictitious, distributed Club Mate vending machine, … where you’ve got one central “controller” service that drives another (remote) service doing the cash handling (money collection and provide change as needed) as well as a Club Mate dispensing service (that of course also tracks its stock). Obviously it is the controller’s task to make sure that no Mate is dispensed if money collection fails, nor should the customer be charged if there’s not enough stock left.

… this story feels a bit constructed, but it fits the two-phase commit idea well and also suits the microservice bandwagon :-)
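The controller's job can be sketched as a tiny in-process two-phase commit. This is not the code written at the meetup (which used separate remote services). The class names CashService and DispenserService, the function sell_bottle and the prepare/commit/rollback method names are hypothetical, and network failures, timeouts and a persistent transaction log are deliberately left out.

```python
class CashService:
    """Hypothetical cash handling service: holds the price until commit."""

    def __init__(self, price: int) -> None:
        self.price = price
        self.inserted = 0   # money the customer has put in so far
        self.collected = 0  # money booked after a successful commit
        self._held = 0      # money reserved by prepare()

    def prepare(self) -> bool:
        if self.inserted < self.price:
            return False
        self.inserted -= self.price
        self._held = self.price
        return True

    def commit(self) -> None:
        self.collected += self._held
        self._held = 0

    def rollback(self) -> None:
        self.inserted += self._held
        self._held = 0


class DispenserService:
    """Hypothetical dispensing service: reserves one bottle until commit."""

    def __init__(self, stock: int) -> None:
        self.stock = stock
        self.dispensed = 0
        self._reserved = 0

    def prepare(self) -> bool:
        if self.stock < 1:
            return False
        self.stock -= 1
        self._reserved = 1
        return True

    def commit(self) -> None:
        self.dispensed += self._reserved
        self._reserved = 0

    def rollback(self) -> None:
        self.stock += self._reserved
        self._reserved = 0


def sell_bottle(participants) -> bool:
    """Phase 1: ask everyone to prepare. Phase 2: commit all or roll back."""
    prepared = []
    for participant in participants:
        if participant.prepare():
            prepared.append(participant)
        else:
            # One "no" vote aborts the sale; undo every earlier reservation.
            for earlier in prepared:
                earlier.rollback()
            return False
    for participant in participants:
        participant.commit()
    return True
```

With an empty dispenser the customer keeps the money, and with stock available both services commit together, which is exactly the guarantee asked of the controller.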

Learnings

- the challenge we came up with was (again) too large – quite like last Thursday when I was hosting the Pig Latin Kata in Nuremberg … the team forming and getting the infrastructure working took way longer than expected (one team couldn’t even start to implement the transaction handling, as they got lost in details earlier on)

- after all implementing a distributed system was fun, even though we couldn’t do a final test drive together (as not all of the services were feature complete)

- … and it’s a refreshing difference to “just doing yet another kata”

- the chosen topic Transactional Patterns turned out to be a good one, @sd_alt told us that he recently implemented some logic which would have benefitted from this pattern

- one participant was new to test-driven development (hence his primary takeaway was how to do that with PHP and phpspec/codeception)

- this also emphasises that we should address developers not familiar with TDD in our invitation (and should try not to scare them away by asking to bring laptops with an installed TDD-ready environment with them)

- for visitors from Nuremberg 6:30 was too early, they asked to start at 7 o’clock

- all participants want us to carry on :-)

… so the next /dev/night is about to take place on September 13, 2016 at 7:10 p.m. The topic is going to be the Command Query Responsibility Segregation pattern and Event Sourcing.

Pig Latin Kata

Yesterday I ran the Pig Latin kata at the local software craftsmanship meetup in Nuremberg. Picking Pig Latin as the kata to do was more a coincidence than planned, but it turned out to be an interesting choice.

So what I’ve prepared were four user stories (of which we only tackled two; one team did three), going like this:

(if you’d like to do the kata refrain from reading ahead and do one story after another)

Pig Latin is an English language game that alters each word of a phrase/sentence, individually.

Story 1:

- a phrase is made up of several words, all lowercase, split by a single space

- if the word starts with a vowel, the transformed word is simply the input + “ay” (e.g. “apple” -> “appleay”)

- in case the word begins with a consonant, the consonant is first moved to the end, then the “ay” is appended likewise (e.g. “bird” -> “irdbay”)

- test case for a whole phrase (“a yellow bird” -> “aay ellowyay irdbay”)

Story 2:

- handle consonant clusters “ch”, “qu”, “th”, “thr”, “sch” and any consonant + “qu” at the word’s beginning like a single consonant (e.g. “chair” -> “airchay”, “square” -> “aresquay”, “thread” -> “eadthray”)

- handle “xr” and “yt” at the word’s beginning like vowels (“xray” -> “xrayay”)

Story 3:

- uppercase input should yield uppercase output (i.e. “APPLE” -> “APPLEAY”)

- also titlecase input should be kept intact, the first letter should still be uppercase (i.e. “Bird” -> “Irdbay”)

Story 4:

- handle commas, dashes, fullstops, etc. well

The End. Don’t read on if you’d like to do the kata yourself.
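For reference, here is a minimal JavaScript sketch of stories 1 and 2. It is just one possible solution, not what any of the groups wrote; the names `translateWord` and `translatePhrase` are made up for illustration, and it assumes lowercase words separated by single spaces as stated in story 1.

```javascript
// Sketch of stories 1 and 2; assumes lowercase letters only.

// Words starting with a vowel, "xr" or "yt" just get "ay" appended.
const VOWEL_LIKE = /^(?:[aeiou]|xr|yt)/;

// Leading consonant (cluster) that moves to the end of the word.
// Longer clusters are listed first so that "thr" wins over "th".
const LEADING_CONSONANTS = /^(?:sch|thr|ch|th|[^aeiou]?qu|[^aeiou])/;

function translateWord(word) {
  if (VOWEL_LIKE.test(word)) {
    return word + 'ay';
  }
  const match = word.match(LEADING_CONSONANTS);
  if (!match) {
    return word; // empty string: nothing to move
  }
  const head = match[0];
  return word.slice(head.length) + head + 'ay';
}

function translatePhrase(phrase) {
  return phrase.split(' ').map(translateWord).join(' ');
}

console.log(translatePhrase('a yellow bird')); // aay ellowyay irdbay
console.log(translateWord('square'));          // aresquay
```

The order of the alternatives in the second regular expression matters: with "th" listed before "thr", the word "thread" would come out as "readthay".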

Findings

When I was running this kata at the Softwerkskammer meetup we had eight participants, who interestingly formed three groups (mostly with three people each), instead of, say, four pairs. The chosen languages were Java, JavaScript (ES6) and (thanks to Gabor) Haskell :-)

… the Haskell group unfortunately didn’t do test-first development, but I think even if they had, they’d still have been the fastest team. Since the whole kata is about data transformation, the functional aspects really pay off here. What I found really interesting about their implementation of story 3 was that they kept their transformation function for lowercase words unmodified (as I would have expected), but before calling it they detected the word’s case and built a pair consisting of the lowercase word plus a transformation function to restore the casing afterwards. When I did the kata on my own I kept the case in a variable and then used some conditionals (which I think is a bit less elegant) …
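The pair idea translates to JavaScript quite directly. The following is my own rough sketch of it, not the Haskell group's code: `splitCase`, `withCase` and the `story1` stand-in are hypothetical names, and treating a single uppercase letter as titlecase (rather than all-uppercase) is an assumption the stories don't settle.

```javascript
// Returns [lowercaseWord, restore]; restore re-applies the detected casing.
function splitCase(word) {
  const lower = word.toLowerCase();
  if (word.length > 1 && word === word.toUpperCase()) {
    // all uppercase, e.g. "APPLE"
    return [lower, (s) => s.toUpperCase()];
  }
  if (word.charAt(0) !== lower.charAt(0)) {
    // titlecase, e.g. "Bird" (also a single uppercase letter like "A")
    return [lower, (s) => s.charAt(0).toUpperCase() + s.slice(1)];
  }
  // lowercase (mixed case such as "iPhone" is simply lowercased here)
  return [lower, (s) => s];
}

// Wraps a lowercase-only transformation without modifying it.
function withCase(transformLower) {
  return (word) => {
    const [lower, restore] = splitCase(word);
    return restore(transformLower(lower));
  };
}

// Stand-in for the untouched lowercase transformation (story 1 rules only).
const story1 = (w) =>
  /^[aeiou]/.test(w) ? w + 'ay' : w.slice(1) + w.charAt(0) + 'ay';

const translateWord = withCase(story1);
console.log(['APPLE', 'Bird', 'bird'].map(translateWord));
// [ 'APPLEAY', 'Irdbay', 'irdbay' ]
```

The nice part is that `story1` never learns about casing at all; any lowercase transformation can be plugged into `withCase`.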

Besides that feedback was positive and we had a lot of fun doing the kata.

… and as a facilitator I underestimated how long it takes the pairs/teams to form, choose a test framework and get started. Actually I did the kata myself with a stopwatch, measuring how long each step would take as I was nervous that my four stories wouldn’t be enough :-) … turns out we spent more time exercising and didn’t even finish all stories.

Further material:

Serverless JS-Webapp Pub/Sub with AWS IoT

I’m currently very interested in serverless (aka no dedicated backend required) JavaScript Web Applications … with AWS S3, Lambda & API Gateway you can actually get pretty far.

Yet there is one thing I didn’t know how to do: Pub/Sub or “Realtime Messaging”.

Realtime messaging allows you to build web applications that can instantly receive messages published by another application (or the same one running in a different person’s browser). There are even cloud services that do exactly this, e.g. Realtime Messaging Platform and PubNub Data Streams …

However, having recently played with AWS Lambda and S3, I was wondering how this could be achieved on AWS … and at first it seemed like it really isn’t possible. Especially the otherwise very interesting article Receiving AWS IoT messages in your browser using websockets by @jtparreira misled me, as it says that this wouldn’t be possible. The article was published in Nov 2015, … not so long ago. But it turns out it’s outdated anyway …

Enter AWS IoT

While reading I stumbled over AWS IoT, which lets you connect “Internet of Things” devices to the AWS cloud and furthermore provides messaging between those devices. It has a message broker (aka Device Gateway) sitting in the middle and “things” around it that connect to it. It’s based on the MQTT protocol and there are SDKs for the Raspberry Pi (Node.js), Android & iOS … sounds interesting, but not at all like “web browsers”

MQTT over Web Sockets

Then I found an announcement: AWS IoT Now Supports WebSockets published Jan 28, 2016.

Brand new, but sounds great :)

… so even though IoT still sounds like a strange thing to do Pub/Sub with, it looks like a way to go.

Making it work

For the proof of concept I didn’t mind publishing AWS IAM user keys to the web application (of course this is a smell to be fixed before production use).

So I went to “IAM” in the AWS management console and created a new user first, attaching the pre-defined AWSIoTDataAccess policy.

The proof of concept should involve a simple web page that establishes a connection to the broker and features a text box where a message can be typed plus a publish button. If two browsers are connected simultaneously, both should immediately receive messages published by one of them.

Required parts: … we of course need an MQTT client and we need to do AWS-style request signing in the browser. NPM modules to the rescue:

- aws-signature-v4 does the signature calculation

- crypto helps it + some extra hashing we need to do

- mqtt has an MqttClient

… all of them have browser support through webpack. So we just need some more JavaScript to string everything together. To set up the connection:

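// Assumed imports (the post does not show them; see the Gist for the exact ones)
const v4 = require('aws-signature-v4');
const crypto = require('crypto');
const { MqttClient } = require('mqtt');
const websocket = require('websocket-stream');

// MqttClient calls this function whenever it needs a (new) stream to the broker
let client = new MqttClient(() => {
  const url = v4.createPresignedURL(
    'GET',
    AWS_IOT_ENDPOINT_HOST.toLowerCase(), // the signature expects a lower-case host
    '/mqtt',
    'iotdevicegateway',
    crypto.createHash('sha256').update('', 'utf8').digest('hex'), // hash of the empty payload
    {
      'key': AWS_ACCESS_KEY,
      'secret': AWS_SECRET_ACCESS_KEY,
      'protocol': 'wss',
      'expires': 15
    }
  );
  return websocket(url, [ 'mqttv3.1' ]);
});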

… here createPresignedURL from aws-signature-v4 does the heavy lifting for us. We tell it the IoT endpoint address, protocol plus AWS credentials and it provides us with the signed URL to connect to.

There was just one stumbling block for me: I had upper-case letters in the hostname (as output by the aws iot describe-endpoint command); the module, however, doesn’t convert these to lower case as expected by AWS’ V4 signing process … and as a result access was denied at first.

Having the signed URL we simply pass it on to a websocket-stream and create a new MqttClient instance around it.

Connection established … time to subscribe to a topic. Turns out to be simple:

client.on('connect', () => client.subscribe(MQTT_TOPIC));

Handling incoming messages … also easy:

client.on('message', (topic, message) => console.log(message.toString()));

… and last but not least publishing messages … trivial again:

client.publish(MQTT_TOPIC, message);

… that’s it :-)
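The three calls can also be bundled into one small helper. This is merely a sketch of how I might string them together, not code from the Gist; `attachPubSub` is a made-up name, and `client` is assumed to be an MqttClient like the one created above (anything offering `on`, `subscribe` and `publish` will do).

```javascript
// Wires subscribe, receive and publish for a single topic.
// Returns a function that publishes a text message to that topic.
function attachPubSub(client, topic, onMessage) {
  // subscribe as soon as the connection is up
  client.on('connect', () => client.subscribe(topic));

  // payloads arrive as Buffers, hence the toString()
  client.on('message', (incomingTopic, payload) => {
    if (incomingTopic === topic) {
      onMessage(payload.toString());
    }
  });

  return (text) => client.publish(topic, text);
}

// Possible usage in the web page (element ids are hypothetical):
// const publish = attachPubSub(client, MQTT_TOPIC, (text) => console.log(text));
// document.getElementById('publish').onclick = () =>
//   publish(document.getElementById('message').value);
```

Since the broker echoes messages back to every subscriber, the publishing browser receives its own message as well.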

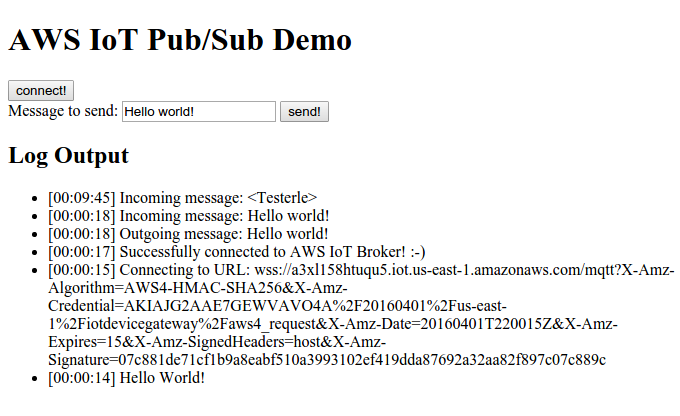

My proof of concept

here’s what it looks like:

… the last incoming message was published from another browser running the exact same application.

I’ve published my source code as a Gist on GitHub, feel free to re-use it.

To try it yourself:

- clone the Gist

- adjust the constants declared at the top of main.js as needed

  - create a user in IAM first, see above

  - for the endpoint host run the aws iot describe-endpoint CLI command

- run npm install

- run ./node_modules/.bin/webpack-dev-server --colors

Next steps

This was just the first (big) part. There’s more stuff left to be done:

- hard-coding AWS credentials into the application source is not the way to go, and neither is publishing the secret key at all

- … one possible approach would be to use the API Gateway + Lambda to create pre-signed URLs

- … this could be further limited by using IAM roles and temporary credentials from identity federation (through the AWS Security Token Service, STS)

- there’s no user authentication yet, this should be achievable with AWS Cognito

- … with that publishing/subscribing could be limited to identity-related topics (depends on the use case)
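To make the API Gateway + Lambda idea from the list a bit more concrete, here is a rough, untested-in-production sketch of what such a function could look like. It is an assumption on my side, not something I have deployed: `buildBrokerUrl` and `makeHandler` are hypothetical names, the environment variable names in the comment are made up, and the signing function is passed in as a parameter (expected to behave like createPresignedURL from aws-signature-v4) so that the sketch runs without the module installed.

```javascript
const crypto = require('crypto');

// SHA-256 of the empty payload, same value as in the browser code above.
const EMPTY_PAYLOAD_HASH =
  crypto.createHash('sha256').update('', 'utf8').digest('hex');

// `sign` is expected to have the signature of createPresignedURL
// from aws-signature-v4; the secret key stays on the server side.
function buildBrokerUrl(sign, config) {
  return sign(
    'GET',
    config.host.toLowerCase(), // upper-case letters break the signature
    '/mqtt',
    'iotdevicegateway',
    EMPTY_PAYLOAD_HASH,
    { key: config.key, secret: config.secret, protocol: 'wss', expires: 15 }
  );
}

// Response shape of an API Gateway Lambda proxy integration.
function makeHandler(sign, config) {
  return async () => ({
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ url: buildBrokerUrl(sign, config) }),
  });
}

// Deployment wiring (not executed here; env variable names are hypothetical):
// exports.handler = makeHandler(require('aws-signature-v4').createPresignedURL, {
//   host: process.env.IOT_ENDPOINT_HOST,
//   key: process.env.IOT_ACCESS_KEY,
//   secret: process.env.IOT_SECRET_KEY,
// });
```

The browser would then fetch the URL from the API and hand it straight to websocket-stream. With an expiry of 15 seconds it has to connect right away, and it needs to request a fresh URL for every reconnect.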